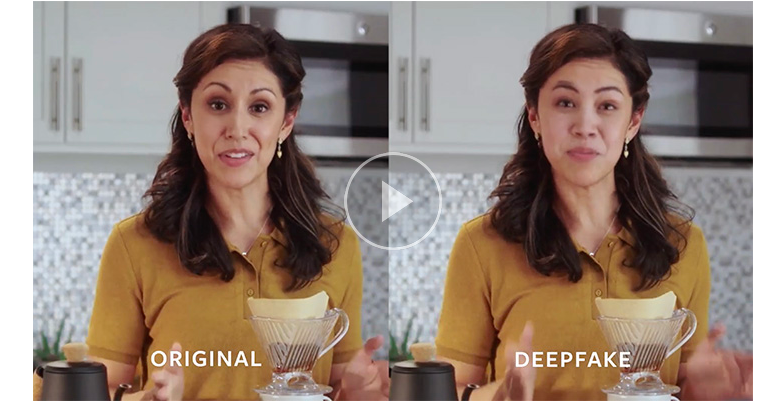

Deepfakes have caught the world’s attention, especially that of politicians, the media and celebrities. Although techniques for manipulating video have long been available, deepfake technology has made manipulation much easier. Moreover, the resulting video looks much closer to real footage, which makes it far more believable.

Deepfake technology is based on artificial intelligence (AI). It is used to manipulate or even create a video that shows something that never occurred. The AI studies a person’s face and then accurately transposes it onto someone else’s expressions.

Thanks to AI and machine learning, anyone can quickly and easily create a realistic-looking video. Software for creating deepfake videos is readily available and doesn’t require any technical knowledge to operate. This has led to widespread misuse of the technology.

Since anyone can create a deepfake video, the consequences could be dire: sparking international outrage, tarnishing someone’s image, crashing the stock market and more.

We have already seen a few examples of deepfake videos going viral, such as the Obama public service announcement, the slowed-down Nancy Pelosi video, the “Zuckerberg speaks frankly” video and the “Trump lectures Belgium” video.

Now, there is growing concern that deepfake videos could be used to sway voters in the 2020 US presidential election. However, the biggest threat of deepfake videos is that, once people start taking them at face value, everyone may begin to doubt video content altogether.

Recognizing these threats, authorities around the globe are drafting legislation to restrict unethical use of the technology. Legislation alone, however, is not sufficient to tackle its growing use.

Therefore, tech companies and research organizations are stepping in to develop apps, programs and tools that detect deepfake videos so they can be taken down quickly. For instance, researchers from the USC Information Sciences Institute (USC ISI) have created a tool to fight deepfakes.

To identify whether a video has been manipulated, the tool studies subtle face and head movements, along with artifacts in the files. According to the researchers, it can identify manipulated videos with up to 96% accuracy. The tool reportedly also takes up less computing power and time.

Google also recently contributed to the development of deepfake detection tools. The search giant released “a large dataset of visual deepfakes.” The idea is that researchers can use these videos to train their software to spot deepfakes and to benchmark the performance of their detectors. Google said:

> Using publicly available deepfake generation methods, we then created thousands of deepfakes from these videos. The resulting videos, real and fake, comprise our contribution, which we created to directly support deepfake detection efforts.
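To make the benchmarking idea concrete, here is a minimal sketch of how a researcher might score a detector against a labeled set of real and fake videos. Everything in it is an assumption for illustration: the `LabeledVideo` record, the `benchmark` helper and the detector signature are hypothetical and do not reflect the layout of Google’s dataset or any particular tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass
class LabeledVideo:
    video_id: str  # hypothetical identifier, not a real dataset field
    is_fake: bool  # ground-truth label assumed to ship with the dataset


def benchmark(detector: Callable[[str], bool],
              videos: Iterable[LabeledVideo]) -> Dict[str, float]:
    """Compare the detector's verdicts with the ground-truth labels."""
    tp = fp = tn = fn = 0
    for video in videos:
        predicted_fake = detector(video.video_id)
        if predicted_fake and video.is_fake:
            tp += 1  # fake correctly flagged
        elif predicted_fake and not video.is_fake:
            fp += 1  # real video wrongly flagged
        elif not predicted_fake and not video.is_fake:
            tn += 1  # real video correctly passed
        else:
            fn += 1  # fake that slipped through
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }
```

Reporting the false positive and false negative rates alongside accuracy matters here, because a detector that wrongly flags real videos causes a different kind of harm than one that lets fakes through.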

Similarly, Facebook, Microsoft, the Partnership on AI coalition and several top universities are working together on the development of deepfake detection tools. Their aim is to develop a dataset of videos and to organize competitions around it.

Apart from these efforts, the companies deploy the usual measures: an algorithm that detects copyrighted content, an algorithm that splits a video into parts so its source can be traced through reverse image search, and manual verification of users.

Experts, however, feel that such tools and programs for spotting AI-manipulated videos are not a permanent solution. Speaking to The Verge, Hao Li, a professor at the University of Southern California and CEO of Pinscreen, said that deepfake detection tools may eventually become useless. Li said:

> at some point it’s likely that it’s not going to be possible to detect [AI fakes] at all. So a different type of approach is going to need to be put in place to resolve this.

Current deepfake detectors rely on “soft biometrics,” which are currently too subtle for AI to mimic. Examples include how Trump raises his eyebrows when emphasizing a point, or how his lips move before he answers a question.

The detection tools learn to spot these subtle movements by studying past videos of the person. However, these detectors will be rendered useless once deepfake technology learns to mimic such movements as well.
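As a rough illustration of the soft-biometrics idea, the sketch below builds a per-person profile from measurements taken on past videos and then checks how far a new video deviates from it. This is only a toy under stated assumptions: the feature names, the z-score comparison and the threshold of 3.0 are hypothetical choices, not the method used by any detector mentioned here.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple

Features = Dict[str, float]  # e.g. {"eyebrow_raise_rate": 1.1} (hypothetical)
Profile = Dict[str, Tuple[float, float]]  # feature -> (mean, std deviation)


def build_profile(past_videos: List[Features]) -> Profile:
    """Summarize each feature across verified past videos of one person."""
    profile: Profile = {}
    for name in past_videos[0]:
        values = [video[name] for video in past_videos]
        profile[name] = (mean(values), pstdev(values))
    return profile


def deviation_score(profile: Profile, candidate: Features) -> float:
    """Average absolute z-score of the candidate video across all features."""
    scores = []
    for name, (mu, sigma) in profile.items():
        sigma = sigma or 1e-9  # guard against a feature that never varies
        scores.append(abs(candidate[name] - mu) / sigma)
    return mean(scores)


def looks_manipulated(profile: Profile, candidate: Features,
                      threshold: float = 3.0) -> bool:
    """Flag the video when its mannerisms sit far outside the usual range."""
    return deviation_score(profile, candidate) > threshold
```

The sketch also shows why Li’s warning holds: once a generator reproduces a person’s mannerisms closely enough, the deviation score of a fake falls below any workable threshold.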

What is needed, then, is a coordinated plan that keeps these detectors useful in the future. For the plan to succeed, social platforms must clearly define their policies on deepfakes. Li says:

> At the minimum, the videos should be labeled if something is detected as being manipulated, based on automated systems.

Another part of the coordinated plan is to ensure continued funding for researchers and organizations, both those developing new deepfake detection tools and those improving existing ones.

One useful technique currently in development is blockchain verification. Dare App, by Eristica Ltd., uses this technique to counter deepfakes, as winners on Eristica get paid in cryptocurrency.

Eristica’s business model is based on users posting challenge videos, so deepfakes could easily bring the company down. On a blockchain, everyone’s transactions are visible. This helps the company detect irregularities in betting, such as a sudden rise in high-stakes challenges.
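To show what spotting “a sudden rise in high-stakes challenges” on a public ledger could look like, here is a minimal sketch. It is not Eristica’s actual method: the `Transaction` record, the `flag_spikes` helper and all thresholds are assumptions made purely for illustration.

```python
from collections import Counter
from typing import List, NamedTuple


class Transaction(NamedTuple):
    day: int      # day index of the transaction (hypothetical field)
    stake: float  # amount staked on the challenge (hypothetical field)


def flag_spikes(transactions: List[Transaction], high_stake: float = 100.0,
                window: int = 3, factor: float = 2.0) -> List[int]:
    """Return days whose high-stake count far exceeds the recent average."""
    if not transactions:
        return []
    # Count only the transactions at or above the high-stake cutoff.
    counts = Counter(t.day for t in transactions if t.stake >= high_stake)
    first = min(t.day for t in transactions)
    last = max(t.day for t in transactions)
    flagged = []
    for day in range(first + window, last + 1):
        # Average daily count over the preceding `window` days.
        baseline = sum(counts[d] for d in range(day - window, day)) / window
        # Floor the baseline at 1 so a quiet period does not flag tiny upticks.
        if counts[day] > factor * max(baseline, 1.0):
            flagged.append(day)
    return flagged
```

A flagged day would only be a prompt for closer review of the videos involved, not proof that any of them is a deepfake.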

Another approach that some researchers are working on is training mice to detect deepfake audio. According to the researchers, mice have an auditory system similar to that of humans. However, they can’t understand the words they hear, and this limitation is what the researchers plan to benefit from.

Because mice don’t focus on the meaning of words, they won’t overlook verbal cues such as the mispronunciation of a letter or a word. The white paper of the research says:

> We believe that mice are a promising model to study complex sound processing and that studying the computational mechanisms by which the mammalian auditory system detects fake audio could inform next-generation, generalizable algorithms for spoof detection.

Featured image from: ai.facebook.com
